OpenAI is looking to release a new deepfake detection tool for independent researchers and academics to fight AI-powered disinformation ahead of the 2024 US Elections.

The AI startup hinted on Tuesday at plans for a DALL-E 3 deepfake detector to fill "a crucial gap in digital content authenticity practices."

(Photo : Stefano Rellandini/AFP via Getty Images)

The detector will include an analytics feature to help researchers estimate the likelihood that an image originated from an AI image generator.

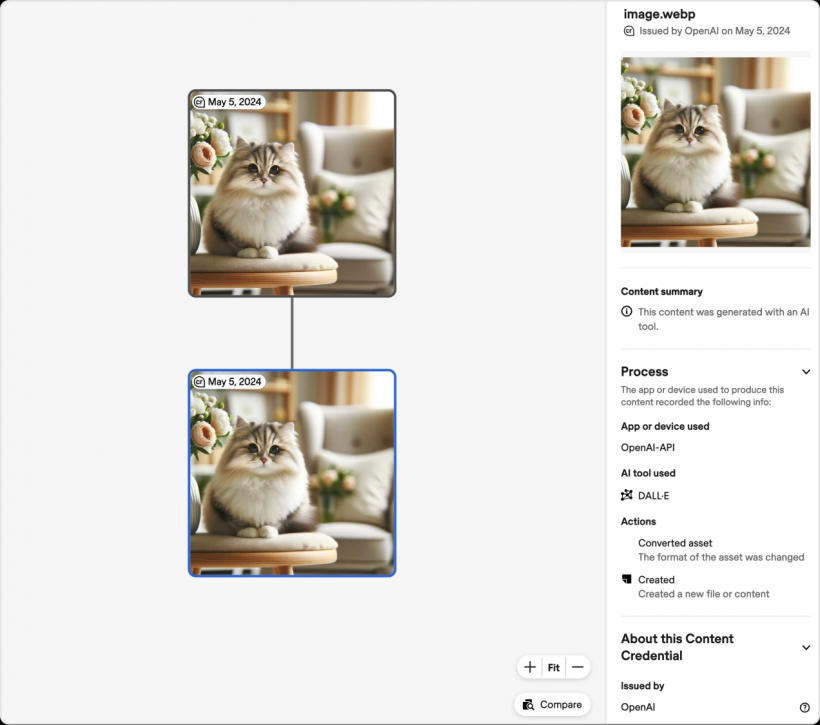

(Photo : OpenAI)

The detector will come in addition to new "tamper-resistant watermarking" features meant to help social platforms and researchers identify media content as digitally manipulated.

The release of the deepfake detector coincides with OpenAI joining the Steering Committee of C2PA, a coalition of tech giants and news outlets fighting against "misleading information online."

The rollout of the new AI tool also follows OpenAI's earlier commitments to put safeguards in place against its technology being abused to spread political misinformation.


OpenAI Technology Criticized for Anti-Misinformation Policies

OpenAI may have stepped up efforts to crack down on misinformation generated on its platforms, but most of the work still depends on third-party researchers and experts rather than internal action.

A study from the Center for Countering Digital Hate last March highlighted this issue, noting that OpenAI's ChatGPT Plus still produces inaccurate images despite promises to improve its systems.

The study noted that OpenAI and other AI companies "failed to prevent the creation of misleading images of voters and ballots," including generating images of notable political figures.

With the company continuously improving its technology to produce more realistic-looking content, as Sora AI has shown in its demo, experts

In its defense, OpenAI has promised several times to improve its "red-teaming" process to detect vulnerabilities and loopholes in its products that bad actors could abuse.

Congress Moves to Curb AI Disinformation Amid Regulation Concerns

With the US Elections approaching and online disinformation growing more rampant, lawmakers have started pushing for more laws to limit AI's impact on information democracy, as standardized regulation remains a distant prospect.

Lawmakers in Congress have already filed a bill that would require social media platforms and AI firms to add watermarks to AI-generated media to prevent bad actors from using it to deceive the public.

Another proposal would require companies to share information about their future AI development projects so that vulnerabilities can be identified during the early stages.
