The controversial Kids Online Safety Act (KOSA) is gaining new traction ahead of Senate deliberation after a revised version of the proposal was submitted on Thursday, Feb. 15.

More than 60 senators have now expressed support for the bill, enough to pass the Senate, after concerns that it could hamper free speech for marginalized groups were smoothed out.

The revision narrows the bill's "duty of care" provision so that it applies only to specific harms, such as mental health disorders, physical violence, and sexual exploitation.

Previous opponents of the bill, such as the digital advocacy group Fight for the Future, said the changes "significantly mitigate the risk of it being misused" to stifle young people's access to online communities.

Some KOSA Concerns Addressed

A primary concern with previous versions of the bill was the risk of KOSA "being weaponized by politically motivated" attorneys general to "target content that they don't like."

This refers to the growing hostility that LGBTQIA+ and pro-Palestine communities face online as more conservative or traditionalist accounts target them.

If KOSA were enacted, states and their attorneys general could penalize social media platforms for spreading harmful content on their sites that could expose children to such posts.

The bill previously drew heavy backlash from the very people it claims to protect because of vague language about how enforcement would apply.

For now, Fight for the Future's Evan Greer said that a clearer definition of "content-neutral manner" is the proper next step for the bill's authors, Sens. Richard Blumenthal and Marsha Blackburn.

US Gov't Moves to Regulate Social Media for Children

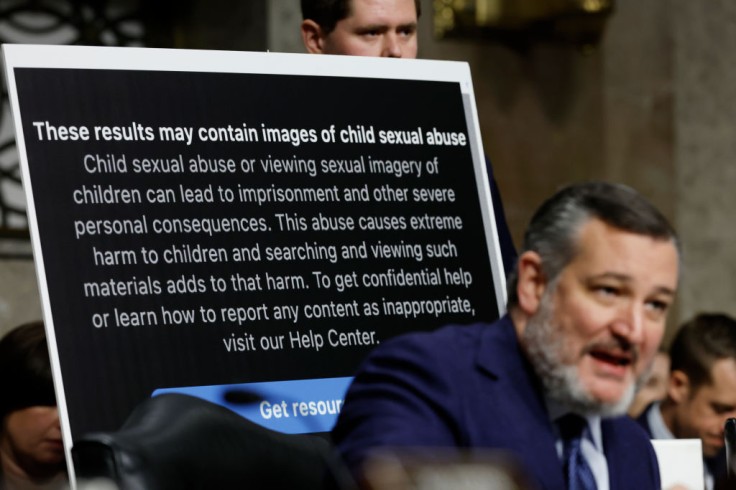

The latest hearing comes after the Senate launched an investigation into major social media companies over their alleged mishandling of underage users.

KOSA was also discussed at the previous hearing, as more tech companies, including X (formerly Twitter), Microsoft, and Snap, endorsed the bill.

Harmful content remains a problem online: more than 33.26 million posts on Meta and TikTok platforms alone were considered "problematic content directed at young users."

Many social media platforms have since introduced child protection policies, including greater parental controls over children's social media feeds.