The AI race between Google, Microsoft, and OpenAI has been intense, to say the least. The three have been announcing new AI advancements in quick succession to one-up each other, with Google on one side and Microsoft and OpenAI on the other.

Google's Latest Card: PaLM 2

The tech giant made a number of announcements at its Google I/O 2023 conference, among them PaLM 2, its next-generation large language model, which is meant to compete with OpenAI's GPT-4.

At the event, Google also announced that the AI technology is already being used to power 25 of its products and services, including its own AI assistant, Bard, and Google Workspace. Some observers, however, say that PaLM 2 still performs worse than GPT-4 and Bing.

For instance, Ethan Mollick, a Wharton professor who writes about AI, says that PaLM 2 performed worse than its main rivals, GPT-4 and Bing, on informal language tests, as reported by Ars Technica.

There's also no way to determine which model, GPT-4 or PaLM 2, has more parameters, since neither company has revealed that information. In contrast, OpenAI did reveal that GPT-3 has 175 billion parameters. There's also the issue of copyright.

Google says that PaLM 2 was trained on several kinds of sources, such as web documents, books, code, mathematics, and conversational data. Without disclosure of the specific sources, some might assume that Google used copyrighted material to train the AI.

This has also been an issue for other AI companies, especially those offering image-generating AI. Such services have been accused of pulling copyrighted media from the web to create the images that users prompt for. It's possible that Google has done the same with PaLM 2.


What Can Google's PaLM 2 Do?

Google claims that PaLM 2 excels at advanced reasoning tasks such as code and math, classification and question-answering, translation, and multilingual proficiency. This was made possible through various improvements in the model.

These improvements include compute-optimal scaling, an improved dataset mixture, and model architecture improvements. These research advancements in large language models are combined to create a much smarter AI than its predecessor.

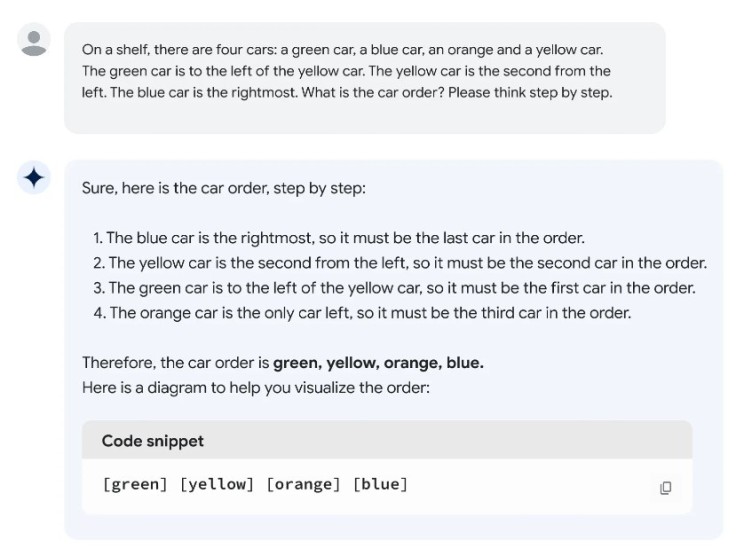

For instance, Google presented an example of reasoning in which a user asked PaLM 2 a complex question and instructed it to think step by step. The AI then enumerated the steps of its response, giving a comprehensive answer.
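For readers who want to try this style of prompting themselves, here is a minimal sketch in Python of how such a request could be put together. It only builds the text of the prompt; the function name `build_step_by_step_prompt` and the exact wording of the instruction are assumptions made for illustration, not Google's code or the prompt used in its demo.

```python
def build_step_by_step_prompt(question: str) -> str:
    """Return the question followed by a request to reason step by step.

    Hypothetical helper for illustration only. The instruction wording
    is an assumption, not the prompt Google showed at I/O.
    """
    cleaned = question.strip()  # drop stray whitespace around the question
    instruction = "Think step by step and number each step of your reasoning."
    return f"{cleaned}\n\n{instruction}"


if __name__ == "__main__":
    # Print the assembled prompt so it can be pasted into any chat assistant.
    print(build_step_by_step_prompt("How many weeks are in 3 years?"))
```

The resulting text can be pasted into any chat-based assistant. The sketch deliberately makes no call to a model, since the article does not describe how developers would access PaLM 2.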

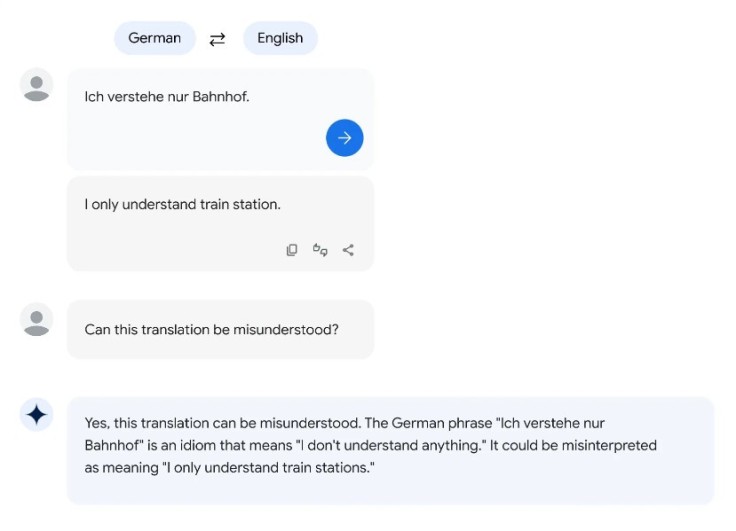

Those who have used Google Translate know that it can sometimes be unreliable and may produce translations that are easily misinterpreted. PaLM 2 can go a step further and point out the potential misunderstanding that a translation could cause.

It also has coding capabilities that developers can use to make their tasks easier. For instance, you can ask it to fix a bug and use its multilingual capabilities to add comments in a particular language, as shown in Google's blog post.