Many of us already enjoy the harmless uses of AI because they make our lives easier. Others, however, see an opportunity to use these tools and services to commit fraud and spread misinformation. A new tool is now within reach, and it could make the problem much worse.

Animate Anyone

A slew of deepfake images and videos has been circulating on the internet ever since generative AI gave us the tools to make them. People have had their voices and likenesses cloned without their consent, usually for the creator's own gain.

Now, even people with little experience in AI generation can create deepfakes. A tool called Animate Anyone does not require complex edits to make a photo move realistically, which, as anyone can predict, could cause a whole new set of problems.

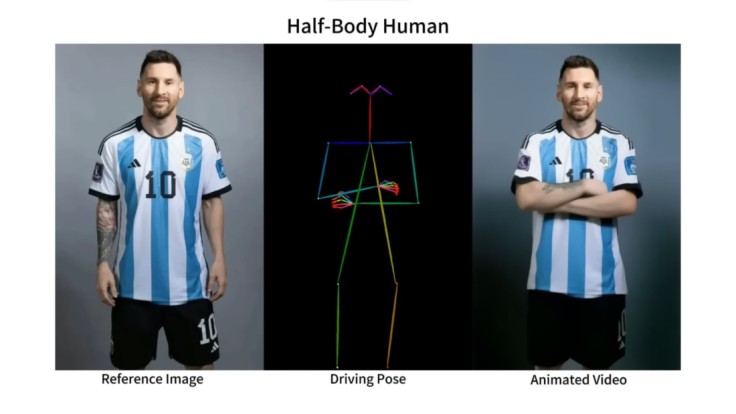

The new generative AI tool was developed by researchers from Alibaba Group's Institute for Intelligent Computing, as reported by TechCrunch. It attaches a base skeleton to the subject in the photo and then uses that skeleton to puppet the subject's movements realistically.

Based on the demonstrations shown, the results don't look like the awkward animations you see in ads, where the AI tool only affects the mouth area of the image and makes it obvious that the clip was AI-generated. Animate Anyone operates on a different level.

The movement is smooth enough that, unless you pay attention to the minuscule details, you'd think it was real. People on the internet rarely examine content that critically, so a video like this will most likely deceive a lot of people before it's taken down.

Previous AI animation tools tend to go overboard with their hallucinations. In case you don't know, AI "hallucinates" details to work out how the subject would look in different positions. Since those guesses are not entirely accurate, they make the editing more obvious.

As shown in Animate Anyone's video, the researchers seem to have reduced the obvious flaws that other AI tools produce. Some examples still show inconsistencies in the subject's face, but most are impressive enough to seem real.

The fashion model, for instance, changes stances flawlessly. It's hard to tell that the video is fake since even the clothing reacts to the movement. The physics of the dress flowing as the model moves looks accurate, as does the waving of the sleeve as the arm moves.

How It Can Be Dangerous

Bad actors could already do plenty of damage with lesser versions of Animate Anyone, so you can imagine what they can do now with a more advanced AI tool. Several film actors have already expressed their concerns over deepfakes in the last year alone.

Tom Hanks, for example, had his likeness stolen and used in a dental plan ad. The actor clarified on Instagram that it was not him and that he had no ties to the dental plan being advertised. "Avengers" star Scarlett Johansson had a similar experience.

The only difference is that the actress had her voice cloned, as per The Verge. Unlike the "Forrest Gump" actor, Johansson did not let the AI app get away with it, and she filed a lawsuit against its developers.