OpenAI's GPT Store, a dedicated online store for custom ChatGPT programs, is already filled with "girlfriend" bots just days after the platform launched.

Despite clear rules against "romantic companionship" on the platform, the majority of the custom-made "GPTs" that appeared on the site are "AI girlfriends" and "sweethearts."

Several of these chatbots have already logged more than 500 conversations.

Several "AI boyfriends" also populated the platform, including a character AI based on YouTuber Wilbur Soot. Similar results also show up when searching for the keyword "husband."

The romance chatbots were first spotted by business news site Quartz last Thursday, Jan. 11, just a day after the store was reported to have opened.

OpenAI has yet to respond to the reports of romantic AI companions on its platform. The AI "girlfriends" and "boyfriends" remain on the GPT Store as of this writing.

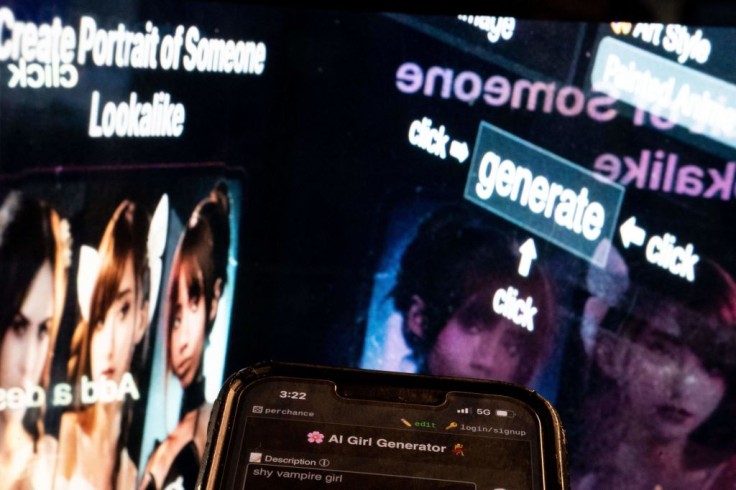

Romantic, NSFW Chatbots Continue to Rise Amid AI Revolution

OpenAI is not the only AI firm experiencing an influx of romantic and not-safe-for-work (NSFW) chatbots on its platform.

Since the AI boom last year, relationship chatbots have become widely popular across multiple app platforms.

In the US alone, seven of the 30 AI chatbot apps downloaded from Apple's App Store and Google Play were some sort of AI friend, girlfriend, or partner, according to Quartz's analysis.

Several websites, such as Romantic AI, have also launched to promote romantic relationships with AI characters.

While such apps may seem harmless on the surface, experts and legislators have expressed concern that the technology could be exploited to use real-life images of minors for NSFW purposes.

The technology has already been used to generate deepfake adult videos of celebrities, mostly women.

OpenAI Faces Another Controversy Over AI Use

The presence of the relationship chatbots is a headache for OpenAI as the tech startup navigates emerging government regulations in the United States and the European Union.

Several world leaders and top business executives have expressed concern about the growing risks posed by AI, primarily misinformation and data privacy violations.

Users' continued operation of bots that clearly violate the platform's policies casts doubt on OpenAI's ability to police its own platform, especially after its promise to fight disinformation in the upcoming 2024 elections.

US lawmakers are currently pushing new legislation that would require federal agencies and AI platforms to adopt the AI guidelines proposed by the Commerce Department.